
PROBLEMS IN THE IMPLEMENTATION OF AI IN ARBITRATION

- Policy Implications of the use of AI

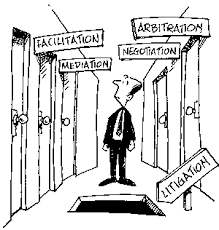

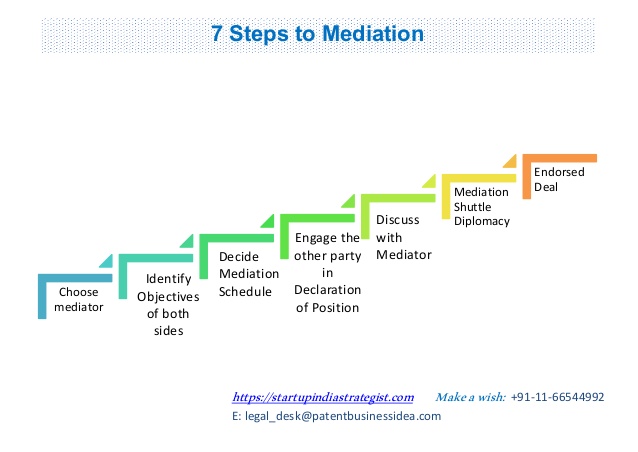

Understandably, the prospect of machine arbitration raises many questions, chief among them whether it would be legally possible under the current legal framework.

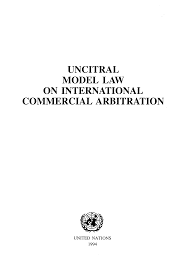

The arbitration framework does not categorically rule out the use of technology in arbitral proceedings.

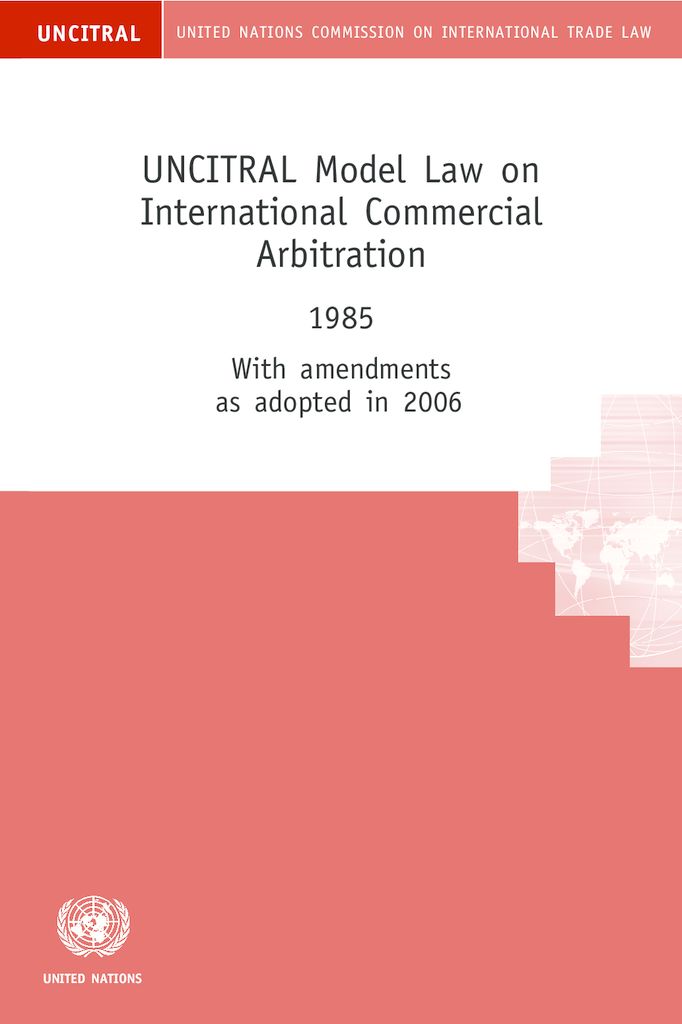

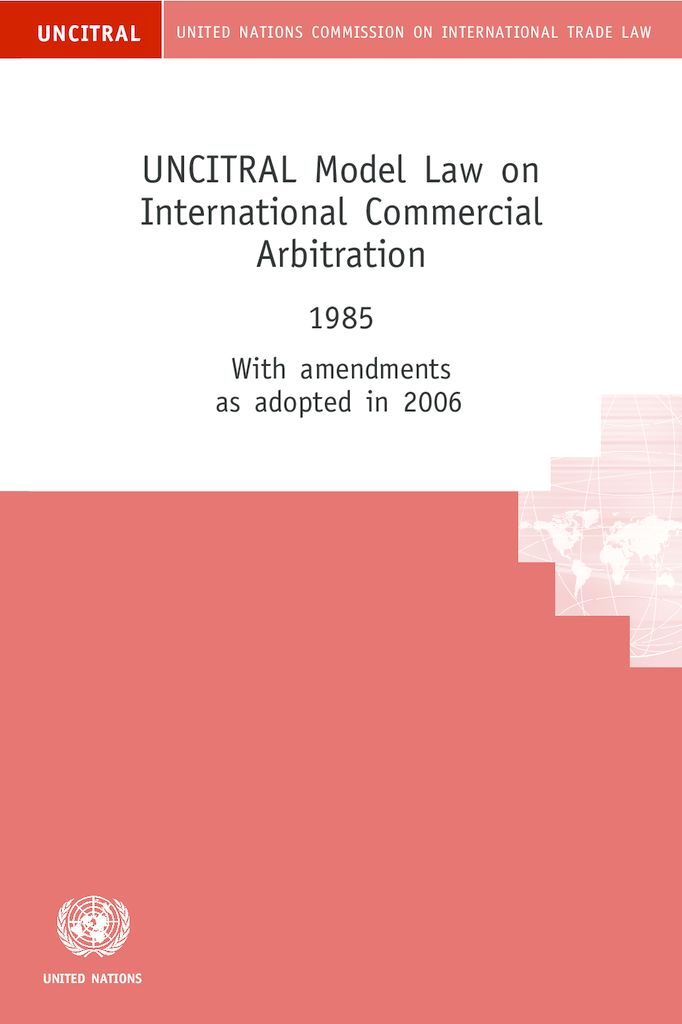

Article 19(1) of the UNCITRAL Model Law on International Commercial Arbitration states that, “subject to the provisions of this Law, the parties are free to agree on the procedure to be followed by the arbitral tribunal in conducting the proceedings”. Article 19(2) provides that, “failing such agreement, the arbitral tribunal may, subject to the provisions of this Law, conduct the arbitration in such manner as it considers appropriate”, and that this power includes “the power to determine the admissibility, relevance, materiality and weight of any evidence”. This broad procedural autonomy, first of the parties and then of the tribunal, leaves room for technological tools to be adopted in the conduct of the proceedings.

- Data Privacy Concerns in the use of AI

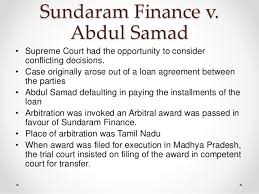

Arbitration is a confidential process. In some settings, such as international commercial arbitration, arbitral awards are typically not published. Because AI systems depend on extensive sharing of data, both to train them and to apply them to case materials, it remains an open question how this intrinsic confidentiality of arbitration can be preserved.

- Ethical considerations in the use of AI

Arbitration practitioners could raise ethical objections based on the absence of human qualities (e.g., emotions), or due process challenges based on the so-called “black box”, which refers to the impossibility of directly explaining the results or predictions of an AI system.

Jurisdictions around the world guarantee the right to a fair trial. As currently interpreted, this guarantee presupposes that proceedings are conducted by a human, because humans combine strict application of the law with more subtle considerations of equity. Many would find it hard to accept the legitimacy of robots as judges or arbitrators: they are not human, they do not have a heart, and they do not apply equity.

- Lack of explainability in AI tools

The problem of lack of explainability arises when the developers and implementers of AI systems cannot explain how the program reaches a conclusion or a prediction. Such programs are known as black-box systems, and the problem occurs especially in deep learning models. This matters because, as AI-enabled systems become critical to life, death, and personal wellness, the need to be able to trust them is paramount.

- Lack of neutrality

AI may not be as “objective” and “neutral” as is commonly believed. AI systems are trained, fed, and operated with data sets. The problem arises when the training data are themselves biased, in which case AI systems may become a vehicle for reproducing human biases and prejudices. Likewise, AI systems used to assist the decision-making of arbitral tribunals could reproduce the trends embedded in the cases used as training data. Critical questions would be: Which cases will be used for this purpose? Who will choose them? How will diversity be guaranteed, given that many cases are kept confidential? In other words, a worldview or set of values inadvertently embedded in previous cases, once used to train these programs, can influence how the systems behave and the decisions they make. For these reasons, the use of AI has the potential to undermine the neutrality of the process and the equality between the parties, both of which are essential to maintaining the legitimacy of the international arbitration regime.


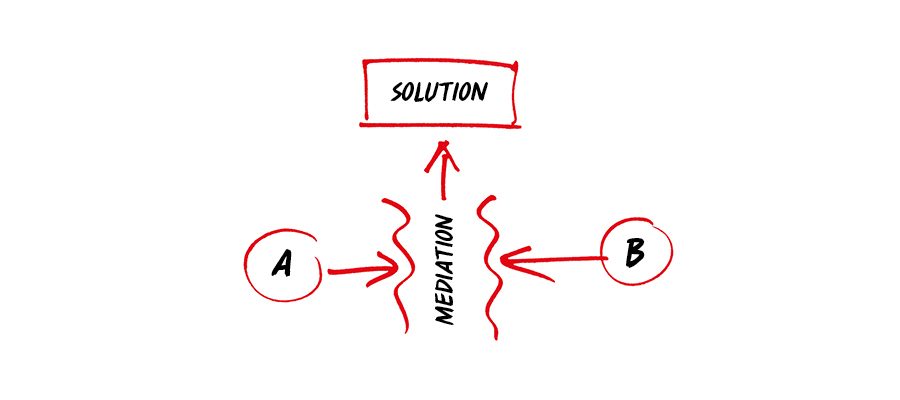
